Asymptotic Analysis Exercises - Java

- Alex Ricciardi

- Sep 18, 2024

- 15 min read

This article showcases asymptotic analyses evaluating algorithms' time complexity using Big-Oh notation through a series of Java-based programming problems.

Alexander S. Ricciardi

September 1st, 2024

This documentation is part of the Critical Thinking 3 Assignment from CSC400: Data Structures and Algorithms at Colorado State University Global. It consists of a series of exercises designed to demonstrate the principles of asymptotic analysis. Asymptotic analysis uses the Big-Oh notation.

“In computer science, the Big-Oh notation is used to describe the time complexity or space complexity of algorithms (Geeks for Geeks, 2024). Mathematically, it defines the upper bound of an algorithm’s growth rate, known as the asymptotic upper bound, and is denoted as 𝑓(𝑛) is 𝑂(𝑔(𝑛)) or 𝑓(𝑛) ∈ 𝑂(𝑔(𝑛)), pronounced 𝑓(𝑛) is Big-Oh of 𝑔(𝑛). The term "asymptotic" refers to the behavior of the function as its input size 𝑛 approaches infinity. In the context of computer science, it describes the worst-case scenario for time complexity or space complexity. For example, an algorithm with 𝑂(𝑛²) time complexity will grow much faster than one with 𝑂(𝑛) as the input size increases, with n representing the number of primitive operations. Primitive operations are low-level instructions with a constant execution time.” (Ricciardi, 2024. Post)

Definition of Big-Oh:

Let f(n) and g(n) be functions mapping positive integers to positive real numbers. We say that f(n) ∈ O(g(n)) if there is a real constant c > 0 and an integer constant n0 ≥ 1 such that

f(n) ≤ c⋅g(n) , for n ≥ n0

(Carrano & Henry, 2018)

The Assignment Direction: Complete the following exercises. For each exercise, show your work and all the steps taken to determine the Big-Oh for each problem. Partial points cannot be awarded without showing work.

Exercise 1

What is the Big-Oh of the following computation?

int sum = 0;

for (int counter = n; counter > 0; counter = counter - 2)

sum = sum + counter;Exercise 2

Suppose your implementation of a particular algorithm appears in Java as follows:

for (int pass = 1; pass <= n; pass++)

{

for(int index = 0; index < n; index++)

{

for(int count = 1; count < 10; count++)

{

. . .

} //end for

} // end for

} //end forThe algorithm involves an array of "n" items. The previous code shows only the repetition in the algorithm, but it does not show the computations that occur within the loops.Those computations, however, are independent of "n." What is the order of the algorithm?

Exercise 3

Consider two programs, A and B. Program A requires 1000 x n^2 operations and Program B requires 2n operations.

For which values of n will Program A execute faster than Program B?

Exercise 4

Consider an array of length "n" containing unique integers in random order and in the range 1 to n + 1.

For example, an array of length 5 would contain 5 unique integers selected randomly from the integers 1 through 6. Thus the array might contain 3 6 5 1 4. Of the integers 1 through 6, notice that 2 was not selected and is not in the array.

Write Java code that finds the integer that does not appear in such an array.

Explain the Big-Oh in your code.

My notes:

- Each exercise starts on a new page.

- 𝑔(𝑛) is the number of primitive operations.

- The summation properties of a constant

Exercise 1

What is the Big-Oh of the following computation?

int sum = 0;

for (int counter = n; counter > 0; counter = counter - 2)

sum = sum + counter;In this exercise 𝑔(𝑛) is the number of primitive operations.

Step 1: Understanding the code

- The ‘sum’ variable initializes to ‘0’.

- The ‘counter’ loop iterates from ‘n’ to ‘1’ inclusive. Note that all the variables andconstants in the loop are integers.

o The ‘counter’ variable initializes to ‘n’.

o The ‘counter’ variable is compared to ‘0’, the loop iterates as long as ‘counter’ is greater than ‘0’.

o The ‘counter’ variable is post-decremented by ‘2’, this means that the ‘counter’ is compared to ‘0’ before being decremented.

o The ‘sum’ variable is incremented by the value of the ‘counter’ variable in eachiteration of the for-loop until the ‘counter’ is less than or equal to ‘0’.

If ‘n = 10’

- 1st iteration ‘counter = 10’ and ‘sum = 0 + 10 = 10’

- 2nd iteration ‘counter = 8’ and ‘sum = 10 + 8 = 18’

- 3rd iteration ‘counter = 6’ and ‘sum = 18 + 6 = 24’

- 4th iteration ‘counter = 4’ and ‘sum = 24 + 4 = 28’

- 5th iteration ‘counter = 2’ and ‘sum = 28 + 2 = 30’

The number of iterations is 𝑛/2

If ‘n = 11’

- 1st iteration ‘counter = 11’ and ‘sum = 0 + 11 = 11’

- 2nd iteration ‘counter = 9’ and ‘sum = 11 + 9 = 20’

- 3rd iteration ‘counter = 7’ and ‘sum = 20 + 7 = 27’

- 4th iteration ‘counter = 5’ and ‘sum = 27 + 5 = 32’

- 5th iteration ‘counter = 3’ and ‘sum = 32 + 3 = 35’

- 6th iteration ‘counter = 1’ and ‘sum = 32 + 1 = 36’

The number of iterations is (𝑛+1)/2

Step 2: Counting the number of iterations

The for-loop will iterate as long as the ‘counter > 0’, the ‘counter is initialized to ‘n’, and it is decremented by ‘2’.

If 𝑘 is the number of iterations, then the last iteration would occur when 𝑛 - 2𝑘 ≤ 0. Solving for 𝑘, 𝑘 ≥ 𝑛/2 . Using the examples above of ‘n = 10’ and ‘n = 11’, we can say that 𝑘 = 𝑛/2 when 𝑛 is even and k = (𝑛+1)/2 when 𝑛 is odd.

Justification:

An odd number is an integer of the form 𝑛 = 2𝑐 + 1, where 𝑐 is an integer.

Then 𝑘 = (2𝑐+1+1)/2 = (2/2) 𝑐 + (2/2) = 𝑐 + 1.

An even number is an integer of the form 𝑛 = 2𝑐, where 𝑐 is an integer.

Then 𝑘 = (2𝑐)/2 = (2/2) 𝑐 = 𝑐

If 𝑐 = 5

Then 𝑛_𝑒𝑣𝑒𝑛 = 2∗5 = 10 and 𝑘_𝑒𝑣𝑒𝑛 = 𝑛_𝑒𝑣𝑒𝑛/2 = 5 = 𝑐

And 𝑛_𝑜𝑑𝑑 = 2∗5+1 = 11 𝑎𝑛𝑑 𝑘_𝑜𝑑𝑑 = (11+1)/2 = 12/2 = 6 = 𝑐+1

Step 3: Number of primitive operations 𝑔(𝑛) per instruction

- The ‘sum’ variable initializes to 0, it is executed only once, 𝑔₁(𝑛) = 1.

- The ‘counter’ variable initializes to n by the for-loop statement this is executed only once, 𝑔₂(𝑛) = 1.

- The ‘counter’ variable is compared to ‘0’, this is executed in each iteration of the loop, and the number of iterations is 𝑘_𝑒𝑣𝑒𝑛 = 𝑛_𝑒𝑣𝑒𝑛/2 , then 𝑔₃(𝑛_𝑒𝑣𝑒𝑛) = 𝑛_𝑒𝑣𝑒𝑛/2; and 𝑘_𝑜𝑑𝑑 = (𝑛_𝑜𝑑𝑑+1)/2, then 𝑔₃(𝑛_𝑜𝑑𝑑) = (𝑛_𝑜𝑑𝑑+1)/2

- The ‘counter’ is post-decremented by ‘2’, this is done after each iteration of the loop and the comparison of ‘counter’ to ‘0’returns true, the number of iterations is 𝑘_𝑒𝑣𝑒𝑛 = 𝑛_𝑒𝑣𝑒𝑛/2, then 𝑔₄(𝑛𝑒𝑣𝑒𝑛) = 𝑛_𝑒𝑣𝑒𝑛/2 ; and 𝑘_𝑜𝑑𝑑 = (𝑛_𝑜𝑑𝑑+1)/2, then 𝑔₄(𝑛_𝑜𝑑𝑑) = (𝑛_𝑜𝑑𝑑+1)/2

- The ‘sum’ variable is incremented by the value of the ‘counter’ variable in each iteration of the for-loop until the ‘counter’ is less than or equal to ‘0’, then the number of times this instruction will be executed is the number of iterations 𝑘_𝑒𝑣𝑒𝑛 = 𝑛_𝑒𝑣𝑒𝑛/2, then 𝑔₅(𝑛_𝑒𝑣𝑒𝑛) = 𝑛_𝑒𝑣𝑒𝑛/2 ; and 𝑘_𝑜𝑑𝑑 = (𝑛_𝑜𝑑𝑑+1)/2, then 𝑔₅(𝑛_𝑜𝑑𝑑) = (𝑛_𝑜𝑑𝑑+1)/2

Step 4: The total of primitive operations

If 𝒏 is even, 𝒌 = 𝒏/𝟐

- 𝑔(𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔₃(𝑛) + 𝑔₄(𝑛) +𝑔₅(𝑛)

- 𝑔(𝑛) = 1 + 1 + 𝑘 + 𝑘 + 𝑘 = 1 + 1 + 𝑛/2 + 𝑛/2 + 𝑛/2

- 𝑔(𝑛) = 2 + (3/2)𝑛

If 𝒏 is odd, 𝒌 = (𝒏-𝟏)/𝟐

- 𝑔(𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔₃(𝑛) + 𝑔₄(𝑛) +𝑔₅(𝑛)

- 𝑔(𝑛) = 1 + 1 + 𝑘 + 𝑘 + 𝑘 = 1 + 1 + (𝑛+1)/2 + (𝑛+1)/2 + (𝑛+1)/2 = 2/2 + 2/2 + 1/2 + 1/2 + 1/2 + 𝑛/2 + 𝑛/2 + 𝑛/2

- 𝑔(𝑛) = 7/2 + (3/2)𝑛

Step 5: The Big-Oh notation

By the definition of the Big-Oh notation, 𝑂(𝑔(𝑛)).

If 𝑛 is even, with 𝑔(𝑛) = 2 + (3/2)𝑛 then the asymptotic complexity is 𝑂(𝑛)

Justification:

2 + (3/2)𝑛 ≤ 𝑐𝑛, for 𝑐 = 4/2 + 3/2 = 7/2 , when 𝑛 ≥ 𝑛₀ = 2 and 𝑛 is even.

If 𝑛 is odd, with 𝑔(𝑛) = 7/2 + (3/2)/𝑛 then the asymptotic complexity is 𝑂(𝑛)

justification:

2 + (3/2)𝑛 ≤ 𝑐𝑛, for 𝑐 = 4/2 + 3/2 = 7/2 , when 𝑛 ≥ 𝑛₀ = 1 and 𝑛 is odd.

When 𝑛 is even Big-Oh is 𝑂(𝑛) and when 𝑛 is even Big-Oh 𝑂(𝑛), thus:

The Big-Oh of the computation is 𝑂(𝑛)

In conclusion, the asymptotic analysis shows that the complexity of the algorithm is directly proportional to the size of the input n, indicating a linear growth rate. O(n) represents a linear type of complexity.

Exercise 2

Suppose your implementation of a particular algorithm appears in Java as follows:

for (int pass = 1; pass <= n; pass++)

{

for(int index = 0; index < n; index++)

{

for(int count = 1; count < 10; count++)

{

. . .

} //end for

} // end for

} //end forThe algorithm involves an array of "n" items. The previous code shows only the repetition in the algorithm, but it does not show the computations that occur within the loops. Those computations, however, are independent of "n." What is the order of the algorithm?

Note that the order of an algorithm refers to its "time complexity" or Big-Oh notation. Below is the time complexity analysis of the code above.

Step 1: Understanding the code

- The outer loop (‘pass’) itenerates from ‘1’ to ‘n’ inclusive. Note that all the variables and constants in the loop are integers.

o The ‘pass’ variable initializes to ‘1’.

o The ‘pass’ variable is compared to ‘n’, the loop iterates as long as ‘pass’ is less than or equal to ‘n’.

o The ‘pass’ variable is post-incremented by ‘1’, this means that the ‘pass' variable is compared to ‘n’ before being incremented.

- The middle loop (‘index’) itenerates from ‘0’ to ‘n’ exclusive. Note that all the variables and constants in the loop are integers.

o The ‘index’ variable initializes to ‘0’.

o The ‘index’ variable is compared to ‘n’, the loop iterates as long as ‘index’ is less than ‘n’.

o The ‘index’ variable is post-incremented by ‘1’, this means that the ‘pass' variable is compared to ‘n’ before being incremented.

- The inner loop (‘count’) itenerates from ‘1’ to ‘10’ exclusive. Note that all the variables and constants in the loop are integers.

o The ‘count’ variable initializes to ‘1’.

o The ‘count’ variable is compared to ‘10’, the loop iterates as long as ‘count’ is less than ‘10’.

o The ‘count’ variable is post-incremented by ‘1’, this means that the ‘count' variable is compared to ‘10’ before being incremented.

Step 2: Counting the number of iterations

- The outer loop will iterate as long as ‘pass’ is less than or equal to ‘n’, and it is post-incremented by ‘1’, starting at ‘pass = 1’.If is the number of iterations:

Thus, the loop iterates 𝒏 times

- The outer middle will iterate as long as the ‘index’ is strictly less than ‘n’. and it is post-incremented by ‘1’, starting at ‘index = 0’.

If is the number of iterations:

Thus, the loop iterates 𝑛 times. However, since the loop is nested within the outer loop, it iterates a total of 𝑛 ∙ 𝑛 = 𝒏² times.

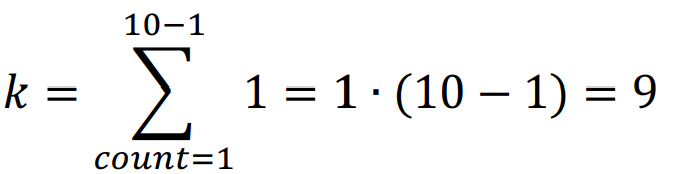

- The inner loop will iterate as long as ‘count’ is strictly less than ‘10’, and it is post-incremented by ‘1’, starting at ‘pass = 1’. If 𝑘 is the number of iterations:

Thus, the loop iterates 9 times. However, since the loop is nested within the middle loop, it iterates a total of 9 ∙ 𝑛² = 𝟗𝒏² times.

Step 3: Number of primitive operations 𝑔(𝑛) per instruction

- The number of primitive operations for the outer loop is:

o The ‘pass’ variable initializes to ‘1’ by the for-loop statement this is executedonly once, then 𝑔₁(𝑛) = 1.

o The ‘pass’ variable is compared to ‘n’, this is executed in each iteration of the loop, and the number of iterations is 𝑘 = 𝑛, then 𝑔₂(𝑛) = 𝑛.

o The ‘pass’ is post-incremented by ‘1’, this is done after each iteration of the loop and the comparison of ‘pass’ to ‘n’ returns true, the number of iterations is 𝑘 = 𝑛, then 𝑔₃(𝑛) = 𝑛.

The total of primitive operations for the outer loop is:

𝑔_𝑜𝑢𝑡𝑒𝑟𝑙𝑜𝑜𝑝(𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔₃(𝑛) = 1 + 𝑛 + 𝑛 = 1 + 2𝑛

𝑔_𝑜𝑢𝑡𝑒𝑟𝑙𝑜𝑜𝑝(𝑛) = 1 + 2𝑛

- The number of primitive operations for the middle loop is:

o The ‘index’ variable initializes to ‘0’ by the for-loop statement this is executed only once. However, since the middle loop is nested within the outer loop, the index’ variable is initialized 𝑛 times, then 𝑔₁(𝑛) = 1 ∙ 𝑛 = 𝑛.

o The ‘index’ variable is compared to ‘n’, this is executed in each iteration of the loop, and the number of iterations is 𝑘 = 𝑛², then 𝑔₂(𝑛) = 𝑛².

o The ‘index’ is post-incremented by ‘1’, this is done after each iteration of the loop and the comparison of ‘index’ to ‘n’ returns true, and the number of iterations is 𝑘 = 𝑛², then 𝑔₃(𝑛) = 𝑛².

The total of primitive operations for the outer loop is:

𝑔_𝑚𝑖𝑑𝑑𝑙𝑒𝑙𝑜𝑜𝑝 (𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔₃(𝑛) = + 𝑛 + 𝑛² + 𝑛² = 𝑛 + 2𝑛²

𝑔_𝑚𝑖𝑑𝑑𝑙𝑒𝑙𝑜𝑜𝑝 (𝑛) = 𝑛 + 2𝑛²

- The number of primitive operations for the inner loop is:

o The ‘count’ variable initializes to ‘1’ by the for-loop statement this is executed only once. However, since the inner loop is nested within the middle loop, the ‘count’ variable is initialized 𝑛2 times, then 𝑔₁(𝑛) = 1 ∙ 𝑛² = 𝑛² .

o The ‘index’ variable is compared to ‘10’, this is executed in each iteration of the loop, and the number of iterations is 𝑘 = 9𝑛² , then 𝑔3(𝑛) = 9𝑛²

o The ‘index’ is post-incremented by ‘1’, this is done after each iteration of the loop and the comparison of ‘index’ to ‘10’ returns true, and the number of iterations is

𝑘 = 9𝑛² , then 𝑔3(𝑛) = 9𝑛² .

The total of primitive operations for the outer loop is:

𝑔_𝑖𝑛𝑛𝑒𝑟𝑙𝑜𝑜𝑝(𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔₃(𝑛) = 𝑛² + 9𝑛² + 9𝑛² = 19𝑛²

𝑔_𝑖𝑛𝑛𝑒𝑟𝑙𝑜𝑜𝑝(𝑛) = 19𝑛²

Step 4: The total of primitive operations done by the loops

- 𝑔_𝑙𝑜𝑜𝑝𝑠(𝑛) = 𝑔_𝑜𝑢𝑡𝑒𝑟𝑙𝑜𝑜𝑝 (𝑛) + 𝑔_𝑚𝑖𝑑𝑑𝑙𝑒𝑙𝑜𝑜𝑝 (𝑛) + 𝑔_𝑖𝑛𝑛𝑒𝑟𝑙𝑜𝑜𝑝 (𝑛)

- 𝑔_𝑙𝑜𝑜𝑝𝑠(𝑛) = 1 + 2𝑛 + 𝑛 + 2𝑛² + 19𝑛²

- 𝑔_𝑙𝑜𝑜𝑝𝑠(𝑛) = 21𝑛² + 3𝑛 + 1

Step 5: The Big-Oh notation

Since the array computation in the inner loop, the only place where computations are done can be considered a constant said we called ‘c’, then the number of times that computation will be is executed is 𝑔_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝 = 9𝑐𝑛².

With 𝑔_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝(𝑛) = 9𝑐𝑛² then the asymptotic complexity is 𝑂_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝(𝑛²).

Justification:

9𝑐𝑛² with c being a constant ≤ (9𝑐)𝑛² = 𝑐𝑛². By the definition of the Big-Oh notation, 𝑂_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝(𝑛²). In other words, we can ignore the array computations in terms of time complexity analysis because the computations within the inner loop are independent of “n”, meaning that the computations in the inner loop, in the context of the time complexity, are constant and are ignored by the Big-Oh nation.

The time complexity of the loops is 𝑂_𝑙𝑜𝑜𝑝𝑠(𝑛²):

with 𝑔_𝑙𝑜𝑜𝑝𝑠(𝑛) = 21𝑛² + 3𝑛 + 1 then the asymptotic complexity is 𝑂_𝑙𝑜𝑜𝑝𝑠(𝑛²)

Justification:

21𝑛² + 3𝑛 + 1 ≤ (21+3+1)𝑛² = 𝑐𝑛², for 𝑐 = 25, when when 𝑛 ≥ 𝑛₀ = 1.

By the definition of the Big-Oh notation.

The overall complexity of the algorithm is 𝑂(𝑛²)

Justification:

With 𝑂_𝑙𝑜𝑜𝑝s(𝑛²), 𝑔_𝑙𝑜𝑜𝑝s(𝑛) = 𝑛² and 𝑂_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝(𝑛²), 𝑔_𝑖𝑛𝑛𝑒𝑟𝑐𝑜𝑚𝑝(𝑛) = 𝑛²

then 𝑛² + 𝑛² = 2𝑛² = 𝑐𝑛², for c=2. By the definition of the Big-Oh notation, the overall complexity of the algorithm is 𝑂(𝑛²) .

Therefore

The order of the algorithm is 𝑂(𝑛²)

In conclusion, the computations within the inner loop are independent of the input ‘n’, meaning that the computation in inner loop, in the context of the time complexity is constant. Since this is the only place where the actual computations occur, we can ignore it in terms of time complexity analysis. The order of the algorithm is 𝑂(𝑛²) indicating that time complexity is quadratic. 𝑂(𝑛²) is a quadric type time complexity.

Exercise 3

Consider two programs, A and B. Program A requires 1000 x 𝑛² operations and Program B requires 2n operations.

For which values of n will Program A execute faster than Program B?

The number of operations for program A is 𝑔_𝐴(𝑛) = 1000𝑛² where 𝑛 is the number of inputs. The number of operations for program B is 𝑔_𝐵(𝑛) = 2𝑛 where 𝑛 is the number of inputs.

When comparing algorithms a primitive operation corresponds to 1 unit of time, 𝑔𝐴(𝑛) and 𝑔𝐵(𝑛) can be defined as a growth rate where the out is the duration and the input is the number of operations ‘n’. Therefore to find the ‘n’ values where Program A executes faster than Program B, we need to identify where 𝑔_𝐴(𝑛) < 𝑔_𝐵(𝑛).

𝑔_𝐴(𝑛) < 𝑔_𝐵(𝑛)

1000𝑛² < 2𝑛

𝑛²/𝑛 < 2/1000

𝑛 < 0.002

The values of ‘n’ where Program A executes faster than Program B are between -∞ and 0.002 excluded, (-∞,0.002). However, operations can be defined only as whole numbers or integers, ‘n’ the number of operations can be a decimal, it can be defined only as an integer Therefore, Therefore, Program A will never run faster than Program B.

Another method that provides a more accurate representation of the relationship is to use the time complexity of the algorithms to compare the algorithms.

The time complexity of Program A is 𝑂_𝐴(𝑛²)

Justification:

1000𝑛² ≤ (1000)𝑛² = 𝑐𝑛², for 𝑐 = 1000, when 𝑛 ≥ 𝑛₀ = 1

The time complexity of Program B is 𝑂_𝐴(𝑛)

Justification:

2𝑛 ≤ (2)𝑛 = 𝑐𝑛, for 𝑐 = 2, when 𝑛 ≥ 𝑛₀ = 1

To find the value of ‘n’ where Program A executes faster than Program B, we need to identify where 𝑂_𝐴(𝑛²) < 𝑂_𝐵(𝑛).

𝑛² < 𝑛 => 𝑛² 𝑛 < 1 => 𝑛 < 1

this is not possible when ‘n’ is an integer and 𝑛 ≥ 𝑛₀ = 1

No values of ‘n’ exist where Program A executes faster than Program B.

Exercise 4

Consider an array of length "n" containing unique integers in random order and in the range 1 to n + 1.

For example, an array of length 5 would contain 5 unique integers selected randomly from the integers 1 through 6. Thus the array might contain 3 6 5 1 4. Of the integers 1 through 6, notice that 2 was not selected and is not in the array.

Write Java code that finds the integer that does not appear in such an array. Explain the Big-Oh in your code.

Note that the expected sum of integers in array length from 1 to 𝑛 + 1 is:

The Java code:

/**

* Finds the missing number

*

* @param array The array of unique integers with one integer missing.

* @param n The length of the array

* @return The missing integer

*/

public static int missingNum(int[] array, int n) {

// The expected sum of integers from 1 to n+1

int expectedSum = (n + 1) * (n + 2) / 2;

int actualSum = 0;

// The actual sum of the numbers in the array

for (int i = 0; i < n; i++) {

actualSum += array[i];

}

return expectedSum - actualSum;

}

Step 1: Understanding the code

- The ‘expectedSum’ variable initializes to ‘(n + 1) * (n + 2) / 2’.

- The ‘actualSum’ variable initializes to ‘0’.

- The ‘array’ loop iterates through from the ‘0’ to the ‘n’ exclusive. Note that all the variables and constants in the loop are integers.

o The ‘i’ variable initializes to ‘0’.

o The ‘i’ variable is compared to ‘n’, the loop iterates as long as ‘i’ is lesse than ‘n’.

o The ‘i’ variable is incremented by ‘1’, this means that the ‘i’ is compared to ‘n’ before being incremented.

o The ‘atualSum’ variable is incremented by the value of the ‘arrau[i]’ variable in each iteration of the for-loop until the ‘i’ is equal to or more than ‘n’.

- The function returns ‘expectedSum – actualSum’

Step 2: Counting the number of iterations in the array loop

- The array loop will iterate as long as ‘i’ is less ‘n’, and it is post-incremented by ‘1’, starting at ‘i = 0’.

If is the number of iterations:

Thus, the loop iterates 𝑛 times.

Step 3: Number of primitive operations 𝑔(𝑛) per instruction

- The ‘expectedSum’ variable initializes to ‘0’, it is executed only once, 𝑔₁(𝑛) = 1.

- The ‘actualSum’ variable initializes to ‘‘(n + 1) * (n + 2) / 2’, it is executed only once,

𝑔₂(𝑛) = 1.

- The number of primitive operations for the array loop is:

o The ‘i’ variable initializes to ‘0’ by the for-loop statement this is executed only once, 𝑔₃(𝑛) = 1.

o The ‘i’ variable is compared to ‘n’, this is executed in each iteration of the loop, and the number of iterations is 𝑘 = 𝑛, then 𝑔₄(𝑛) = 𝑛.

o The ‘i’ is post-incremented by ‘1’, this is done after each iteration of the loop and the comparison of ‘pass’ to ‘n’ returns true, the number of iterations is 𝑘 = 𝑛, then 𝑔₅(𝑛) = 𝑛.

o The ‘actualSum’ is incremented with the value of ‘array[i]’ variable in each iteration of the for-loop until the ‘i' is equal to or more than ‘n’. The number of times this instruction will be executed is the number of iterations k = n, then 𝑔₆(𝑛) = 𝑛 The total of primitive operations for the outer loop is:

𝑔_𝑙𝑜𝑜𝑝(𝑛) = 𝑔₃(𝑛) + 𝑔₄(𝑛) +𝑔₅(𝑛) + 𝑔₆(𝑛) = 1 + 𝑛 + 𝑛 + 𝑛 = 1 + 3𝑛

𝑔_𝑙𝑜𝑜𝑝(𝑛) = 1 + 3𝑛

- The function returns ‘expectedSum – actualSum’, this instruction is performed only once, the 𝑔₇(𝑛) = 1

Step 4: The total of primitive operations

𝑔(𝑛) = 𝑔₁(𝑛) + 𝑔₂(𝑛) + 𝑔_𝑙𝑜𝑜𝑝(𝑛) + 𝑔₇(𝑛)

𝑔(𝑛) = 1 + 1 + 1 + 3𝑛 + 1 = 4 + 3𝑛

𝑔(𝑛) = 4 + 3𝑛

Step 5: The Big-Oh notation The Big-Oh notation is O(n).

Justification:

4 + 3𝑛 ≤ (4 + 3)𝑛 = 𝑐𝑛, for 𝑐 = 7, when 𝑛 ≥ 𝑛0 = 1. By the definition of the Big-Oh notation, the overall complexity of the code is 𝑂(𝑛) .

The Big-Oh notation of my code is 𝑂(𝑛).

The Big-Oh notation 𝑂(𝑛) in my code indicates that the complexity of the code grows in a 1-to-1 proportional relationship with the size of the input, ‘n’, which is the size of the ‘actualArray’ array. In other words, the complexity of the code grows linearly with the size of the array ‘n’, this is known as linear complexity. For example, in terms of time complexity, as the size of the array gets larger, the time it takes to run the code also increases proportionally in 1-to-1 relationship. This is because the duration that it takes to iterate through each element in the loop is the most time-consuming part of the code and all other primitive operations are ignored by the Big-Oh notation, due to their minimal impact on the overall complexity of the code.

Comments